Measuring Celebrant Performance


by © Jennifer Cram - Brisbane Marriage Celebrant

Originally published as Do You Like Me? How Much Do You Like Me? in The Celebrant, Issue 6, December 2020, pp 14-23

Republished 06/12/2022 with the permission of the Editor.

Categories: | Published Article | Celebrant |

How do you judge your performance as a celebrant? What do you rely on to give you an indicator of your relative success? Do you have an active performance measurement system in place? Is that system information-seeking or merely compliments-eliciting? Are you asking questions that will stroke your ego or ones that will point the way to areas where improvement or change is needed? Or are you using surrogate measures of success?

The only way to ensure that the data we are using will give us the information we need is to have a good performance measurement system in place. Unfortunately, it appears to me that our industry is very prone to using flows (data about traffic, such as numbers of ceremonies booked, numbers of followers, number of likes) and feel goods (good report cards in the form of formal and informal feedback) as indicators of how good we and our services are and how satisfied our clients are. We may also ignore or discount external factors that can lead us to erroneous conclusions.

Implementing a good performance measurement system is not a simple undertaking in a service environment. It is particularly difficult in an environment such as the wedding industry in which there is a complex interaction between emotion, tradition, social expectations, and legal requirements together with little regulation.

In Australia, for example, marriage celebrants are regulated by the Federal Attorney General’s Department. Yet there is no requirement for celebrants to have any performance assessment regime in place. While authorized marriage celebrants are required to adhere to a Code of Practice, that code has never required celebrants to implement systematic performance measurement. The previous version of the code merely required that celebrants ‘accept evaluative comment from the parties, and use any comments to improve performance’. That minimal requirement is no longer in the current version, which only requires that marriage celebrants inform marrying parties about how to make a complaint.

Client Satisfaction Data is Not Enough

Getting a good report card from clients may be satisfying, but it does little or nothing to assist in accurately assessing the extent to which services fall short of customer expectations. Nor does it provide information which can be used to assess where problems may lie or what needs to be done to fix them. When we get a good report card the natural tendency is to assume that our clients are satisfied because we provided a great service and therefore we are being presented with evidence of cause and effect. Realistically, it is likely that all we are seeing is an association.

Let me give you a couple of examples of how easy it is to jump to conclusions about cause and effect:

- I’m told that, between 1970 and 1985, at the same time the stork population in the German State of Lower Saxony was declining, so was the birth rate. Does that prove that storks bring babies? Of course not.

- Some years ago my local council loudly trumpeted the fact that installation of new water meters throughout the city had resulted in a 25% reduction in water usage, which seems totally logical until you look at the weather records for the period and realise that there was so much rain over the period that we had had quite widespread flooding.

Reported satisfaction is also shaped by how and when the feedback is collected:

- Whether feedback is sought via written means (eg an email or a feedback form) or in a conversation can make as much as a 12% difference in reported satisfaction levels.

- How the question is framed, positively or negatively, will affect the answer.

- Timing also makes a difference.

- There is a great deal of research that suggests that clients find it difficult to respond negatively to customer-satisfaction surveys, possibly because they chose to use that particular service or service provider.

What clients expect of celebrants is likely to be driven by a complicated mix of the client’s prior actual and/or vicarious experience of similar services, or of services they perceive to be equivalent (including church services and services conducted by registrars or marriage officers working in courts and registry offices) and what they have been led to expect from and by you.

It gets worse. Celebrants often complain that clients won’t or don’t respond to requests for feedback, and that too few of them provide testimonials or write reviews. We have responded to this by embracing the not-uncommon practice of using surrogate ways of assessing whether and how much clients like us. The big three surrogate measures in our industry are Bookings, Turnover/Earnings, and Referrals.

Bookings/Number of Ceremonies Officiated

The celebrant industry seems to regard the number of ceremonies performed as a figure that is not only a measure of relative success, but also one that comes with boasting rights. The higher the number, the greater the success. In reality this figure is merely a workload statistic that can be influenced (or manipulated) by factors that have nothing to do with service quality.

The Bottom Line

Related to bookings as a measure, the bottom line is a measure of income. Obviously that is affected by your pricing. Like bookings, it can be impacted by external factors over which you have no control and which have absolutely nothing to do with the level of service you deliver. (Need I mention COVID?) Gross income may look good, but it is net income (i.e. what’s left over after expenses) that is an indicator of how successful your business is. Your net income may be artificially inflated by the way you identify, assess, and document expenses. Trend information (the bottom line trending up or down) may flag that something is going on, but without data, and detailed analysis of that data, you won’t know what that might be. And there is no guarantee of which way your bottom line will go next year, next month, or even next week. While the bottom line can reveal how good you are at running your business, and how much in demand you might be, it cannot shed any light on the quality of your services or how good you are at doing your job.

Referrals

Referrals are an ambiguous performance measure. You have to look at who recommended you and why they did so. Why people recommend a particular business or individual, or indeed why they make recommendations at all, is immensely complicated. It is often clouded by a personal agenda that has nothing to do with perceived quality, an agenda that could be anything from convenience to control, and all points in between.

You also have to take into account who they recommended you to. Clients who have booked you because someone recommended you can be influenced by who made the recommendation. If the venue they booked recommended you, they may feel obligated to book you. If someone with whom they have a personal relationship or someone they see as powerful, influential, or of higher social status did so, they are more likely to book you and to express higher satisfaction with your services, regardless of their experience with you. To do anything else would create doubts about their relationship with the referrer. In such cases clients are biased towards assuming that their less than satisfactory experience was the exception, or that they somehow lack the knowledge or skills to accurately assess your services, so they will express satisfaction, regardless. Or it might simply come down to not wanting to admit to anyone, even themselves, that they made a less than optimal choice.

Gap Analysis


You can’t adequately assess your own performance if

you don’t collect data, interpret and use that data

to evaluate your performance, and take action as a

result of identifying areas for improvement or

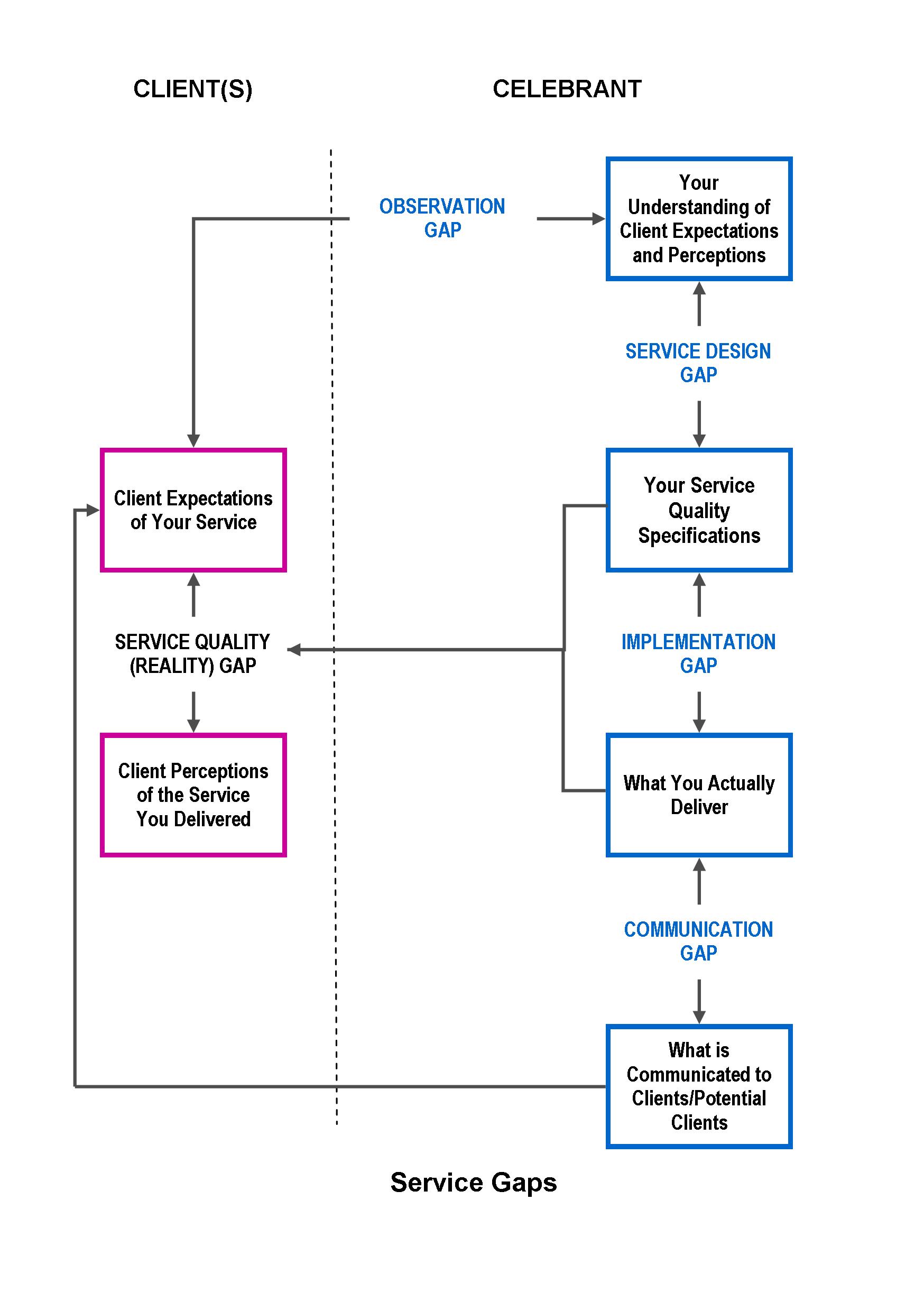

change. I’m a rusted-on fan of service-gap analysis because it not only highlights that any difference between what you think you deliver and what you actually deliver will create a gap between what your clients expected and how they perceived what you delivered, which will impact on how satisfied they actually are with your service, but will help identify why.

All gaps impact on service quality.

- A Service Design Gap occurs when you select inappropriate or inadequate service designs and service standards. For example: Does the way you’ve designed your services mesh with your ideal client?

- An Implementation Gap occurs when you do not deliver to your service standards or you haven’t appropriately implemented your systems.

- An Observation Gap occurs when you are either not aware of what clients/potential clients actually expect, or you have erroneous beliefs about what they think you offer. If my experience is any indicator, and there is plenty of anecdotal data to suggest it is, part of our job as celebrants is to correct misconceptions. But we also need to look at what clients feel we should offer, rather than only at what they think we would/will/do offer. This means we should look beyond client expectations to their wants, their feelings, their values, their attitudes and their beliefs and at the same time examine our own expectations, wants, feelings, values, attitudes, and beliefs. How you frame what you believe their expectations are will have a huge influence on how you assess your own performance.

- A Communication Gap occurs when performance doesn’t match promises.
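For readers who keep their feedback in a spreadsheet or script, the gap between what clients expected and how they perceived what was delivered can be roughed out in numbers. The Python sketch below assumes you have asked the same clients to rate a few aspects of your service on a 1 to 5 scale, once for what they expected and once for what they felt they received. The aspect names, the scale, the ratings, and the `service_gaps` helper are all hypothetical, invented purely for illustration.

```python
from statistics import mean

# Hypothetical ratings on a 1-5 scale from the same three clients:
# "expected" = what they expected, "perceived" = what they felt they got.
# Aspect names and numbers are invented for illustration only.
responses = {
    "communication": {"expected": [5, 4, 5], "perceived": [4, 4, 3]},
    "personalisation": {"expected": [4, 4, 5], "perceived": [5, 4, 5]},
    "legal paperwork": {"expected": [5, 5, 5], "perceived": [5, 5, 5]},
}


def service_gaps(data):
    """Return the average perceived-minus-expected score for each aspect."""
    return {
        aspect: round(mean(scores["perceived"]) - mean(scores["expected"]), 2)
        for aspect, scores in data.items()
    }


gaps = service_gaps(responses)

# List the aspects from biggest shortfall to biggest surplus.
for aspect, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{aspect}: {gap:+.2f}")
```

A negative number flags an aspect where the service fell short of expectations. Scores like these only point to where to look; they are subject to the same response biases as any other rating, and the reasons behind a gap still have to come from talking to clients.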

One word of warning. You can’t effectively model the customer perspective solely from your point of view as a service provider. This is where a methodology I invented while working in libraries can come in handy. I called it the One Question Survey. I would ask my staff to discuss a particular topic with as many people as possible over a period of a week or two. The beauty of this approach is that, because the information sought is part of a conversation, you tap into sub-texts that more formal mechanisms miss - the totality of clients’ reactions, needs, and opinions and the reasons for their responses.

Such conversations are a much better source of information about what you need to do to improve the quality of your services than virtually any other form of data gathering, for the simple reason that they close the Communication Gap. Once you really understand what your clients expect, you can take action to make sure that you are effective in the way you address any service quality issues gap analysis identifies.

Thanks for reading!
